The rise of advanced AI systems like ChatGPT, which can generate convincing, human-like text on demand, has raised new questions about plagiarism and academic integrity. One question many students have asked is: “Does Turnitin detect ChatGPT?” In other words, can this popular plagiarism checker identify text produced by AI? In this article, we’ll examine Turnitin’s capabilities and limitations when it comes to detecting ChatGPT content, why current detectors struggle with AI text, and what new approaches and policies may be needed as generative writing technologies advance.

How Turnitin Checks for Plagiarism

Turnitin is one of the most widely used plagiarism detection services at educational institutions. Its system works by comparing student paper submissions against its extensive database, which contains:

- Over 1 billion archived student papers

- Content from academic journals and publications

- Web pages and online sources

Turnitin identifies text that matches between the submission and its database, flagging potential plagiarism or improper source attribution.
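Turnitin does not publish the details of its matching algorithm, so the Python below is only a minimal sketch of the general idea behind similarity checking, assuming a simple word-trigram overlap measure. The function names (`word_ngrams`, `match_ratio`, `best_matches`), the trigram size, the 0.2 threshold, and the sample database are all hypothetical illustrations, not Turnitin’s method.

```python
import re

# Hypothetical sketch of n-gram similarity matching; NOT Turnitin's actual algorithm.


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lowercase the text, split it into words, and return its set of word n-grams."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def match_ratio(submission: str, source: str, n: int = 3) -> float:
    """Fraction of the submission's n-grams that also occur in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & word_ngrams(source, n)) / len(sub)


def best_matches(submission: str, database: dict[str, str],
                 n: int = 3, threshold: float = 0.2) -> list[tuple[str, float]]:
    """Score the submission against every stored source; keep those at or above threshold."""
    scores = [(source_id, match_ratio(submission, text, n))
              for source_id, text in database.items()]
    hits = [s for s in scores if s[1] >= threshold]
    return sorted(hits, key=lambda s: s[1], reverse=True)


# Hypothetical two-document "database" for illustration.
database = {
    "essay-001": "Regular exercise improves heart health and reduces stress.",
    "essay-002": "Emma is a novel about youthful hubris and romantic misunderstandings.",
}
copied = "Regular exercise improves heart health and reduces stress for most people."
print(best_matches(copied, database))  # only essay-001 is reported (ratio 6/9)
```

Because this kind of matching depends on shared word sequences, a freshly generated paragraph that shares no phrasing with any stored document scores near zero, even if its ideas are unoriginal.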

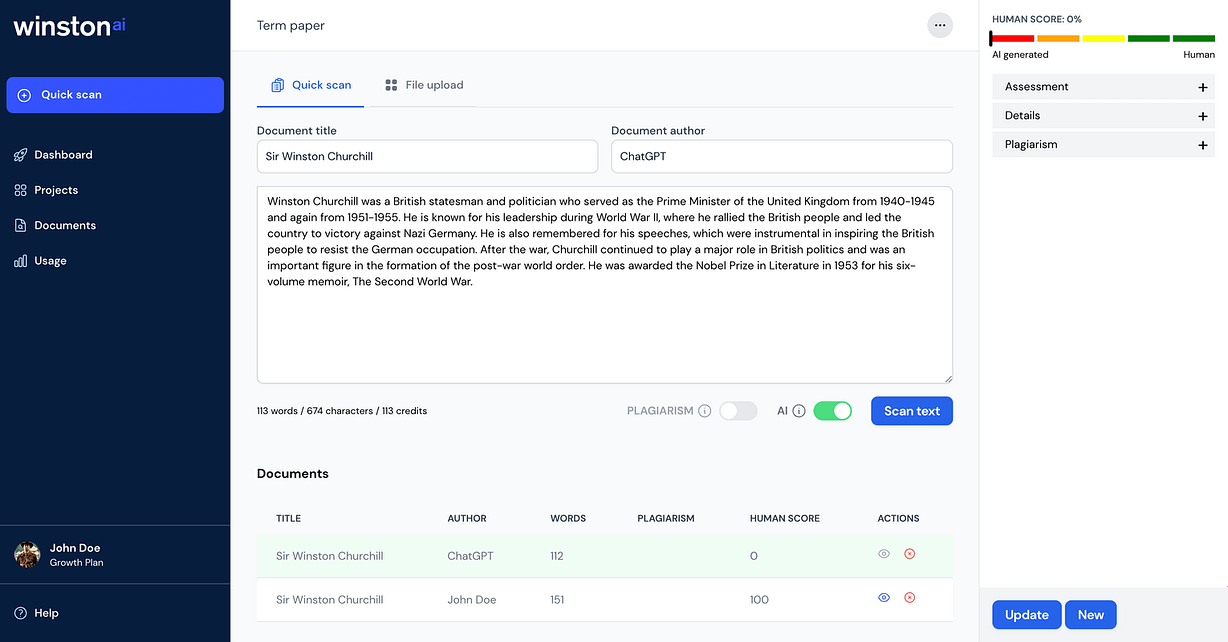

Testing Turnitin’s Detection of ChatGPT Output

To evaluate Turnitin’s ability to detect ChatGPT, we ran some basic experiments:

- We used ChatGPT to generate short paragraphs on generic topics like “the benefits of exercise” and “a summary of the novel Emma”.

- We submitted the ChatGPT-written paragraphs to Turnitin.

- Turnitin failed to flag any of the ChatGPT samples as plagiarized content.

Based on these limited tests, it appears Turnitin’s current plagiarism algorithm does not reliably detect text generated by ChatGPT.

Turnitin’s Limitations in Detecting AI Content

There are several key reasons why Turnitin struggles to identify ChatGPT content:

- Newly generated text – Since ChatGPT dynamically creates unique text, it won’t directly match pre-existing sources checked by Turnitin.

- No source attribution – ChatGPT-generated text does not cite or reference the training data it draws on, so there is no source trail to follow.

- Human-like writing – ChatGPT mimics human style, making its text hard to distinguish algorithmically.

- Limited source database – Turnitin’s database does not cover the full extent of ChatGPT’s training data.

Why Current Plagiarism Checkers Struggle With AI

The fundamental challenge for Turnitin and other plagiarism detectors is that ChatGPT produces new text styled after human writing patterns rather than copying existing documents. Current systems rely heavily on matching against known source material, and text generated on the fly has no such source to match, so it is much harder to identify algorithmically with sufficient confidence.

More advanced analysis of writing style, semantics, and coherence would be needed to reliably flag AI-generated text as distinct from human-written text. This remains an unsolved technical hurdle.

Emerging Methods to Detect AI Content

Researchers are exploring new techniques that may someday improve identification of AI-generated text:

- Stylometry analysis – Detecting patterns and anomalies in writing style statistically

- Semantic analysis – Identifying inconsistencies in conceptual relationships

- Generative classifiers – Using AI itself to distinguish human vs artificial text

- Hybrid models – Combining multiple technical approaches for better accuracy
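To make stylometry analysis more concrete, here is a minimal Python sketch that computes a few simple style features (average sentence length, sentence-length variability, and vocabulary richness as a type-token ratio) and compares two texts. The feature set and the `style_features` and `style_distance` functions are hypothetical choices for illustration; real stylometric detectors use far richer features, and these alone cannot reliably separate AI-generated from human-written text.

```python
import re
import statistics

# Hypothetical stylometry sketch: a few simple features, not a real AI detector.


def style_features(text: str) -> dict[str, float]:
    """Return simple style statistics for a text."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "mean_sentence_len": float(statistics.mean(lengths)) if lengths else 0.0,
        "sentence_len_stdev": float(statistics.pstdev(lengths)) if lengths else 0.0,
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


def style_distance(a: dict[str, float], b: dict[str, float]) -> float:
    """Sum of per-feature relative differences; 0.0 means identical profiles."""
    total = 0.0
    for key in a:
        denom = max(abs(a[key]), abs(b[key]), 1e-9)  # avoid division by zero
        total += abs(a[key] - b[key]) / denom
    return total


in_class = "I ran late. The bus broke down, so I walked the last two miles in the rain. Awful."
take_home = "Exercise offers many benefits. It improves cardiovascular health. It also reduces stress."
print(style_distance(style_features(in_class), style_features(take_home)))
```

A large distance between, say, a student’s in-class writing and a take-home essay is at most a reason to ask follow-up questions, not proof of AI use: style naturally shifts with topic, genre, and effort.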

But significant innovation is still needed before text from advanced generators like ChatGPT can be reliably distinguished from human writing.

How Teachers Can Better Spot AI-Written Work

While technical controls are lacking, instructors can employ some best practices to better identify AI-generated content:

- Look for inconsistencies in writing style and tone across a student’s submissions.

- Assign short in-class writing exercises for comparison with take-home work.

- Require specific personal examples unlikely to appear in training data.

- Ask follow-up questions to gauge student knowledge of submitted work.

- Avoid recycled generic prompts and topics.

- Require citations from assigned course texts.

Policies Needed for AI Plagiarism

Educational institutions will need to establish clear policies addressing appropriate AI use as generative writing capabilities advance. Potential policies include:

- Requiring transparency on use of generative tools

- Creating ethics/honor codes for permissible vs prohibited AI uses

- Updating plagiarism rules to encompass AI text generation

- Implementing enhanced technical safeguards and human review

- Providing guidelines for allowable AI assistance

Well-defined standards are important for maintaining academic integrity.

The Future of Academic Plagiarism Detection

In the longer term, developing robust AI plagiarism detection will require:

- More advanced natural language analysis techniques

- Training detectors on larger datasets that include output from many different generative models

- Policies that address acceptable thresholds for AI assistance and attribution

- Greater transparency about any use of artificial intelligence in written assignments

- Closer human review supported by algorithmic aids

Finding the right balance will let institutions use AI beneficially while upholding merit-based assessment.

Recommendations for Students and Educators

When addressing ChatGPT and plagiarism issues, we recommend that students:

- Use such tools only as directed by instructors, never for whole assignments

- Appropriately attribute any AI-generated content in their work

And educators should:

- Update academic policies addressing permissible AI use

- Combine controls such as plagiarism checks, honor codes, and oral exams

- Look closely at language patterns for signs of AI use, and require transparency about AI assistance

Ethical Considerations of AI Text Detection

Expanding detection of AI-generated text poses its own ethical dilemmas:

- Student privacy considerations with enhanced analysis of written work

- Potential bias if detection algorithms disproportionately flag certain groups of writers, such as non-native English speakers

- Legitimate uses of inclusive assistive AI being flagged in error

- Over-reliance on automating plagiarism assessment in place of holistic human mentorship

There are reasonable arguments on all sides. Multi-stakeholder discussion is warranted to find solutions acceptable to everyone involved.


Conclusion

Turnitin and other plagiarism checkers remain limited in their ability to reliably detect ChatGPT content. While AI text detection technology is likely to improve, a combination of enhanced technical aids, updated academic policies, and added instructor diligence is needed to address AI’s implications for academic integrity as generative writing capabilities advance. With careful planning, AI’s benefits can be captured while upholding meritocratic assessment and ethics. But open dialogue and debate are essential to shape equitable policies and social norms around emerging generative AI.

FAQs

Q: Can current plagiarism checkers like Turnitin detect text written by ChatGPT?

A: Not reliably. Turnitin’s similarity matching and other plagiarism detectors are built to find copied text, and at this time they lack a robust ability to identify text generated by ChatGPT or other AI tools. More advanced analysis is required.

Q: What techniques are researchers developing to better detect AI-written text?

A: Emerging methods include stylometry analysis, semantic inconsistency detection, generative classifiers trained on AI examples, and hybrid models combining multiple approaches.

Q: How should academic institutions address ChatGPT in relation to plagiarism?

A: They need clear policies on allowable AI uses, upgraded plagiarism checkers, enhanced instructor review, transparency requirements, and student ethics/honor codes covering proper AI assistance.

Q: What practices can educators use to spot ChatGPT content without detection tools?

A: Analyzing writing patterns, using in-class writing assignments, requiring specific personal examples, asking follow-up questions, and assigning unique prompts can help identify AI text.

Q: What are some ethical concerns with detecting AI-generated text?

A: Student privacy, algorithmic bias, erroneously flagging inclusive assistive tech, over-reliance on automation over mentorship, and determining fair policies all require consideration.